When I first started managing projects on GCP, I quickly realized that clicking through the console didn’t scale. Each change felt like a one-off task that was hard to track and impossible to reproduce. That’s when I began using the Terraform GCP Provider.

Also called the Google provider, it connects Terraform to Google Cloud. Instead of writing API calls, I could define infrastructure once and deploy it consistently across environments.

The shift brought immediate benefits: automation through CI/CD pipelines, version-controlled infrastructure in Git, and the ability to scale changes safely across teams. What used to be manual and error-prone became repeatable and auditable.

5 Best Practices for Terraform GCP Provider

In practice, the GCP Provider became the bridge between my Terraform configurations and Google Cloud’s APIs. It turned infrastructure management into a process that was consistent, automated, and resilient. Here are my five top tips.

1. Managing GCP Resources with Terraform GCP Provider

Let’s examine some best practices for managing GCP resources with the Terraform GCP Provider.

a. Least-Privilege Service Accounts

When Terraform provisions resources, it should authenticate as a service account that has only the permissions it needs in the GCP project. Create dedicated service accounts for Terraform with limited authorization. For instance, if your configuration only provisions Compute Engine resources, grant Terraform just enough authorization to manage Compute Engine resources within that one project. You can add more permissions as your IaC evolves.
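As a minimal sketch, assuming an administrator applies it once from a separate bootstrap configuration, a dedicated Terraform service account limited to Compute Engine in one project could look like this. The account ID terraform-compute and the project_id variable are placeholders, and roles/compute.instanceAdmin.v1 is one reasonable narrow role, not the only possible choice.

```hcl
# Bootstrap sketch: the account ID "terraform-compute" and var.project_id are placeholders.
variable "project_id" {
  type        = string
  description = "Project that Terraform is allowed to manage."
}

# Dedicated identity used only by Terraform.
resource "google_service_account" "terraform" {
  project      = var.project_id
  account_id   = "terraform-compute"
  display_name = "Terraform (Compute Engine only)"
}

# One narrow role on one project, instead of broad roles such as roles/editor.
# Attaching a service account to new VMs additionally requires roles/iam.serviceAccountUser.
resource "google_project_iam_member" "terraform_compute" {
  project = var.project_id
  role    = "roles/compute.instanceAdmin.v1"
  member  = "serviceAccount:${google_service_account.terraform.email}"
}
```

To run Terraform as this account without a downloaded key file, the google provider’s impersonate_service_account argument can be set to the account’s email; whoever runs Terraform then needs roles/iam.serviceAccountTokenCreator on that account.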

b. Project Segmentation

Your organization may be working on multiple software products owned by different teams. These applications could have multiple environments. You can organize GCP projects by environment and/or by team. This isolates resources, simplifies access control, and aids cost tracking. For instance, create separate projects, such as myapp-dev and myapp-prod, if you are creating projects per environment.
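A sketch of the per-environment layout, assuming the projects are created under an existing folder and linked to a billing account you supply. The folder_id and billing_account inputs are placeholders. Because project IDs are globally unique, real IDs usually need a suffix rather than the bare myapp-dev and myapp-prod used here.

```hcl
# Placeholder inputs for illustration.
variable "folder_id" {
  type        = string
  description = "Numeric ID of the folder that holds myapp's projects."
}

variable "billing_account" {
  type        = string
  description = "Billing account ID attached to each project."
}

# One project per environment: myapp-dev and myapp-prod.
resource "google_project" "myapp" {
  for_each = toset(["dev", "prod"])

  name            = "myapp-${each.key}"
  project_id      = "myapp-${each.key}"
  folder_id       = var.folder_id
  billing_account = var.billing_account

  # Label each project with its environment for cost reporting.
  labels = {
    env = each.key
  }
}
```

Keeping environments in separate projects means IAM grants and budgets can be scoped per project rather than per resource.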

c. Labeling for Cost Awareness

Attach labels to resources for better cost allocation. Labels are carried into your billing data, so consistent labeling lets you break down costs by team, environment, or owner in GCP’s billing reports.
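If most resources share the same labels, google provider versions 5.0 and later can set them once through the provider-level default_labels argument. A minimal sketch with illustrative label values, assuming the project_id and region variables are declared elsewhere; labels set directly on a resource, as in the instance example that follows, override a default label with the same key.

```hcl
provider "google" {
  project = var.project_id
  region  = var.region

  # Merged into every resource that supports labels (provider 5.0+).
  # Example values only.
  default_labels = {
    team       = "team1"
    owner      = "controlmonkey"
    managed_by = "terraform"
  }
}
```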

```hcl
resource "google_compute_instance" "instance1" {
  name         = "my-vm"
  machine_type = "e2-micro"

  labels = {
    env   = "dev"
    team  = "team1"
    owner = "controlmonkey"
  }

  # ... other configurations
}
```

2. Managing State Files with Terraform GCP Provider

The Terraform state file contains the current state of your infrastructure. Terraform requires information in the Terraform state to identify the resources it manages and plan actions for creating, modifying, or destroying resources.

Storing state locally is risky: teammates can overwrite each other’s changes, and the local file is not encrypted, even though state can contain sensitive values. Instead, host your state file in a remote backend. On GCP, a popular option is Google Cloud Storage (GCS), which can version, encrypt, and store your Terraform state. You can control access to the state using IAM permissions.

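Here is our setup so far:

```hcl
terraform {
  required_version = ">= 1.3"

  required_providers {
    google = {
      source = "hashicorp/google"
      # Pin the provider version so every run uses the same release.
      version = "6.47.0"
    }
  }

  # Remote state in GCS. Backend settings cannot reference variables,
  # so the bucket name and prefix are literal values.
  backend "gcs" {
    bucket = "cmk-terraform-state-bucket"
    prefix = "dev/networking"
  }
}

provider "google" {
  credentials = file(var.credentials_file)
  project     = var.project_id
  region      = var.region
}
```

Make sure object versioning is enabled on your GCS bucket so you can recover earlier versions of the state. GCS encrypts data at rest by default; if your organization needs control over the keys, configure customer-managed encryption keys (CMEK). The GCS backend also supports state locking (concurrency control) natively, which prevents two runs from writing the same state at the same time.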
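Because the bucket must exist before terraform init can use it, it is usually created in a separate bootstrap configuration. A minimal sketch of that bucket, reusing the cmk-terraform-state-bucket name from the backend block; the US location and the terraform_sa_email input are assumptions for illustration.

```hcl
# Placeholder input: email of the service account that runs Terraform.
variable "terraform_sa_email" {
  type = string
}

resource "google_storage_bucket" "tf_state" {
  name     = "cmk-terraform-state-bucket"
  location = "US"

  # Keep previous state versions so a bad write can be rolled back.
  versioning {
    enabled = true
  }

  # IAM-only access control and no public access.
  uniform_bucket_level_access = true
  public_access_prevention    = "enforced"

  # Guard against accidentally destroying the state bucket.
  lifecycle {
    prevent_destroy = true
  }
}

# Give the Terraform service account read/write access to state objects.
resource "google_storage_bucket_iam_member" "tf_state_rw" {
  bucket = google_storage_bucket.tf_state.name
  role   = "roles/storage.objectAdmin"
  member = "serviceAccount:${var.terraform_sa_email}"
}
```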

3. Modularizing Terraform GCP Provider Code

Terraform modules make your code DRY (Don’t Repeat Yourself) and accelerate deployments. You can start by identifying common patterns in your existing infrastructure and converting them to modules.

For instance, you can create generic modules for compute, networking, storage, and security configuration. Parameterize each module with variables, then reuse it across multiple projects, or across environments within the same project.
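For example, assuming a hypothetical local module at ./modules/compute-instance that declares project_id, name, and machine_type inputs, the same module can be instantiated once per environment project:

```hcl
# Hypothetical module path and inputs, for illustration only.
module "web_dev" {
  source       = "./modules/compute-instance"
  project_id   = "myapp-dev"
  name         = "web"
  machine_type = "e2-micro"
}

module "web_prod" {
  source       = "./modules/compute-instance"
  project_id   = "myapp-prod"
  name         = "web"
  machine_type = "e2-standard-2"
}
```

Only the inputs differ between environments; the resource logic lives once, inside the module.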

Consider the following when you modularize your Terraform code:

- Store your module code in a separate repository and manage it using version control. Tag releases in a consistent manner.

- When using third-party modules, opt for well-documented modules from reputable registries.

- Use variables and locals to parameterize your Terraform modules. Add variable validations and defaults to fit your most common use cases.

- Document your modules!
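Two of these points might look like this in practice. The sketch assumes the same hypothetical compute-instance module: a variables.tf entry with a default and a validation rule, and a caller that pins a tagged release from a separate repository. The repository URL, the environment input, and the v1.2.0 tag are placeholders.

```hcl
# modules/compute-instance/variables.tf (hypothetical module)
variable "environment" {
  type        = string
  description = "Deployment environment, also used as the env label."
  default     = "dev"

  # Reject typos such as "prd" at plan time.
  validation {
    condition     = contains(["dev", "staging", "prod"], var.environment)
    error_message = "The environment must be one of: dev, staging, prod."
  }
}

# Caller side (a different file): pin a tagged release of the module repository.
module "web" {
  source      = "git::https://github.com/example-org/terraform-gcp-modules.git//compute-instance?ref=v1.2.0"
  project_id  = "myapp-prod"
  environment = "prod"
  # ... other inputs
}
```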

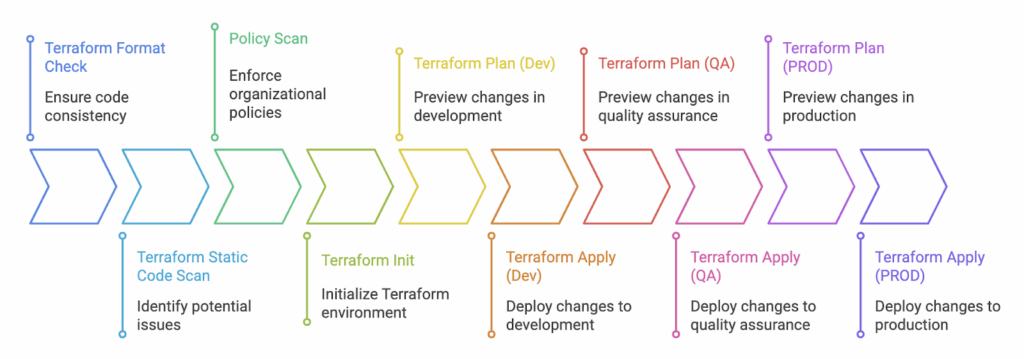

4. Optimizing DevOps with Terraform GCP Provider

Automation is crucial for managing cloud infrastructure effectively. It reduces manual effort and significantly improves deployment frequency and speed. You can bake steps such as static code scanning, format checks, and drift detection into your automations, and caching providers and modules between runs can shorten pipeline times.

Running Terraform from a pipeline also avoids many common headaches, such as state lock conflicts and permission issues, because every run uses the same runner, credentials, and backend configuration instead of individual workstations. Pipelines also keep detailed logs that help you track changes and pinpoint when they occurred.

For simplicity, you can use a managed CI/CD service such as Google Cloud Build. A simple Terraform pipeline checks formatting, validates the configuration, plans, and applies changes. Here is a minimal cloudbuild.yaml covering the init, plan, and apply steps:

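```yaml
steps:
# The image version must satisfy required_version (">= 1.3"); 1.0.0 would fail.
# The image's entrypoint is terraform, so args are its subcommand and flags.
- id: 'terraform init'
  name: 'hashicorp/terraform:1.9.8'
  args: ['init', '-input=false']

# Save the plan so the apply step runs exactly what was planned.
- id: 'terraform plan'
  name: 'hashicorp/terraform:1.9.8'
  args: ['plan', '-input=false', '-out=tfplan']

# Applying a saved plan file does not prompt for approval.
- id: 'terraform apply'
  name: 'hashicorp/terraform:1.9.8'
  args: ['apply', '-input=false', 'tfplan']
```

Consider including the following steps or integrations when setting up your automations: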

- Add format checks: Terraform has a built-in terraform fmt command that rewrites files into canonical style; run terraform fmt -check in CI to fail the build when files are not formatted.

- Validate configurations: Use the terraform validate command to check that the configuration is syntactically valid and internally consistent before planning.

- Incorporate Static Code Analysis: Use tools such as Checkov and tfsec with any CI/CD tool to identify known security issues in your Terraform configurations.

- Integrate Policy Checks: Policy-as-code tools, such as Open Policy Agent (OPA), can check configurations against organizational policies.

- Gated Promotions: Deploy to a dev project, test in staging, and promote to prod after approval.

- Integrate Drift Detection: Detect when the actual infrastructure changes outside your automations. A Terraform plan that runs periodically can help with this: terraform plan -detailed-exitcode exits with code 2 when the plan contains changes, which a scheduled pipeline can treat as drift. Tools such as ControlMonkey provide advanced drift remediation capabilities.
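Gated promotions can also be managed as code. A minimal sketch, assuming the Cloud Build GitHub App is already connected to a hypothetical example-org/infrastructure repository and that each environment has its own build file: pushes to main run the dev pipeline, while version tags start the prod pipeline only after a manual approval. Depending on your project’s defaults, you may also need to set each trigger’s service_account argument.

```hcl
# Repository owner/name and build file paths are placeholders.
resource "google_cloudbuild_trigger" "dev" {
  name     = "terraform-dev"
  filename = "envs/dev/cloudbuild.yaml"

  github {
    owner = "example-org"
    name  = "infrastructure"
    push {
      branch = "^main$"
    }
  }
}

resource "google_cloudbuild_trigger" "prod" {
  name     = "terraform-prod"
  filename = "envs/prod/cloudbuild.yaml"

  # Run only for semantic-version tags such as v1.4.0.
  github {
    owner = "example-org"
    name  = "infrastructure"
    push {
      tag = "^v[0-9]+\\.[0-9]+\\.[0-9]+$"
    }
  }

  # Builds from this trigger wait for manual approval before running.
  approval_config {
    approval_required = true
  }
}
```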

5. Troubleshooting Terraform GCP Provider Issues

Sometimes, you may encounter unexpected errors with Terraform when using it on GCP. Some of them are from the Terraform GCP (Google Cloud Platform) provider, which we will examine in this section.

- API Quota Errors: The GCP provider translates your configuration into API requests, and GCP enforces quotas on how many requests it will serve within a given time frame. At times you may see HTTP 429 Too Many Requests errors. In such cases, check quotas in GCP’s Console (IAM & Admin > Quotas) and request an increase. To reduce the load, you can also lower Terraform’s parallelism (the default is 10):

```shell
terraform apply -parallelism=3
```

- IAM Binding Errors: Terraform needs permission to create, modify, and delete the resources you declare in your configuration. If you see permission-denied errors, verify that the service account Terraform uses has the roles required to provision your infrastructure. For example, provisioning GKE typically requires the roles/container.admin role.

- Errors from Deleted GCP Resources: When you delete resources without using Terraform, they remain listed in the state, which can lead to errors or unexpected plans. If Terraform should no longer manage such a resource, remove it from the state:

```shell
terraform state rm <resource_type>.<resource_name>
```

- Debugging: You may encounter different errors or warnings when applying Terraform. Knowing what Terraform is doing underneath helps you pinpoint the issue precisely. Set the TF_LOG environment variable to get detailed output on Terraform’s actions, and optionally TF_LOG_PATH to write the log to a file:

```shell
TF_LOG=DEBUG TF_LOG_PATH=terraform-debug.log terraform apply
```
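For the deleted-resources case, Terraform 1.7 and later also offer a declarative alternative to terraform state rm: a removed block, added after you delete the resource block from your configuration. A minimal sketch using the instance1 address from the labeling example; note that the required_version constraint shown earlier also allows older Terraform versions, so it would need raising to ">= 1.7" for this to work.

```hcl
# Requires Terraform 1.7+. Replace the address with the resource to forget.
removed {
  from = google_compute_instance.instance1

  lifecycle {
    # Drop the resource from state without trying to destroy it in GCP.
    destroy = false
  }
}
```

Unlike a one-off state rm command, this change goes through code review and the normal plan/apply pipeline.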

Conclusion

The Terraform GCP Provider is the bridge between your code and Google Cloud APIs. By following the practices covered here, including least-privilege service accounts, remote state, modular code, and automation, you can build secure, scalable, and resilient GCP environments.

Start small, experiment, and grow with confidence. If you need to manage Terraform at scale, platforms like ControlMonkey provide guardrails, along with drift detection and compliance enforcement out of the box.

Book a demo with ControlMonkey to see how we simplify Terraform on Google Cloud.