“We adopted Terraform to gain control, not to overspend our cloud budget.” That’s what I hear every other week from cloud leaders managing live AWS environments. Despite using Infrastructure as Code, many teams still struggle with Terraform AWS cost optimization because of invisible drift, inconsistent practices, and a “just ship it” mindset. They overprovision, forget cleanup, or misconfigure storage, and AWS bills grow quietly.

The good news? Terraform can be a cost control engine – if you use it intentionally.

I put together a playbook of 11 real-world Terraform strategies to cut AWS costs without sacrificing scale. I used them myself at Spot.io, and I saw our leading customers apply them daily with the Terraform AWS provider.

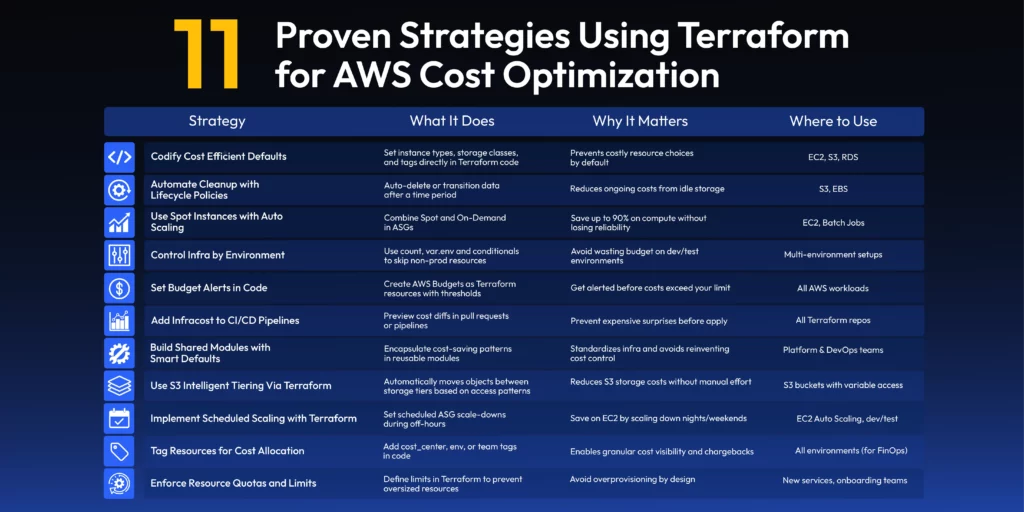

TL;DR: Use Terraform as Your AWS Cost Optimization Engine

Codify infrastructure, automate cleanup, right-size resources, and enforce cost policies, all with Terraform.

The visual below shows 11 proven ways to cut your AWS bill using Terraform — from codifying defaults to enforcing budgets and reviewing cost diffs in CI/CD.

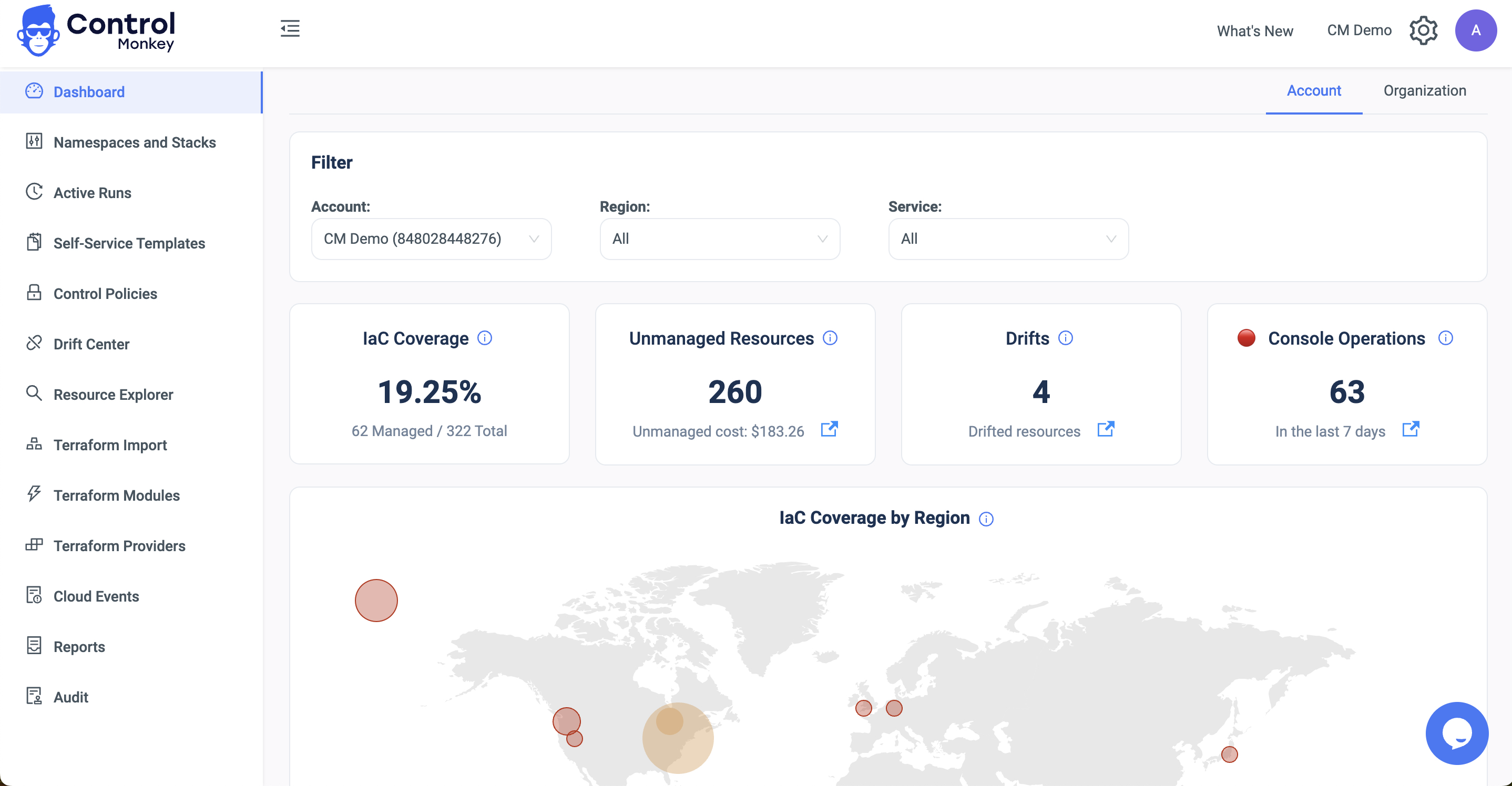

Paired with ControlMonkey, you get real-time drift detection, usage visibility, and guardrails to prevent overspend before it happens.

How Terraform Enables AWS Cost Optimization at Scale

Terraform, as an Infrastructure as Code (IaC) tool, provides a consistent and automated way to manage AWS infrastructure. Its main purpose is to provision and manage resources declaratively, and that same discipline helps control AWS costs by making infrastructure patterns predictable, efficient, and reviewable.

Below are eleven Terraform-based techniques that help reduce AWS costs without compromising performance or scale:

1️⃣ Codifying Cost-Efficient Infrastructure

With Terraform, every AWS resource is defined in code. You can directly include cost-saving practices in the Terraform configuration. These practices include instance right-sizing, minimal retention policies, and tag-based chargeback methods. For example:

```hcl
resource "aws_instance" "web_server" {
  count         = var.deploy_web ? 1 : 0 # Conditional deployment
  ami           = "ami-0abcdef1234567890"
  instance_type = "t3.micro" # Cost-effective burstable instance

  tags = {
    Name        = "WebServer"
    Environment = var.env
    CostCenter  = var.cost_center
  }
}
```

Because the instance size lives in code and every change goes through review, developers cannot quietly deploy expensive resources without explicit intent and approval.
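Beyond hard-coding a size, you can make cost rules part of the configuration itself. The sketch below is a minimal example, assuming a hypothetical allow-list of approved instance types and the us-east-1 region: a `validation` block makes `terraform plan` fail when someone requests an unapproved type, and the AWS provider's `default_tags` block stamps chargeback tags on every taggable resource it creates. The variable names `instance_type` and `cost_center` are illustrative.

```hcl
# Hypothetical allow-list of instance types approved for general workloads
variable "instance_type" {
  type        = string
  default     = "t3.micro"
  description = "EC2 instance type, restricted to a cost-approved allow-list"

  validation {
    condition     = contains(["t3.micro", "t3.small", "t3.medium"], var.instance_type)
    error_message = "The instance_type value must be one of t3.micro, t3.small, or t3.medium."
  }
}

variable "cost_center" {
  type        = string
  description = "Cost center used for chargeback tagging"
}

provider "aws" {
  region = "us-east-1" # assumed region

  # Tags applied to every taggable resource created through this provider
  default_tags {
    tags = {
      CostCenter = var.cost_center
      ManagedBy  = "terraform"
    }
  }
}
```

Keeping the allow-list in one variable turns "which sizes are acceptable" into a reviewed decision rather than a per-resource choice.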

2️⃣ Enforcing Lifecycle Policies for Terraform AWS Cost Optimization

Terraform’s support for AWS lifecycle configurations helps automate the cleanup of old data. For example, S3 buckets can be defined with Intelligent-Tiering or lifecycle policies that transition old data to cheaper storage classes or delete it, which reduces storage costs.

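```hcl
resource "aws_s3_bucket_lifecycle_configuration" "data_archive" {
  bucket = aws_s3_bucket.archive.id

  rule {
    id     = "archive-rule"
    status = "Enabled"

    # Empty filter applies the rule to every object in the bucket
    filter {}

    # Move objects to S3 Glacier Flexible Retrieval after 30 days
    transition {
      days          = 30
      storage_class = "GLACIER"
    }

    # Delete objects one year after creation
    expiration {
      days = 365
    }
  }
}
```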
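Lifecycle rules can also clean up data that is easy to forget. The sketch below is a minimal example, assuming a hypothetical versioned bucket defined as `aws_s3_bucket.versioned`: it expires object versions 30 days after they become noncurrent and aborts multipart uploads that never completed, both of which otherwise keep accruing storage charges.

```hcl
resource "aws_s3_bucket_lifecycle_configuration" "versioned_cleanup" {
  # Hypothetical versioned bucket; point this at your own resource
  bucket = aws_s3_bucket.versioned.id

  rule {
    id     = "cleanup-rule"
    status = "Enabled"

    # Apply to every object in the bucket
    filter {}

    # Remove old object versions 30 days after they stop being current
    noncurrent_version_expiration {
      noncurrent_days = 30
    }

    # Delete parts left behind by multipart uploads that never completed
    abort_incomplete_multipart_upload {
      days_after_initiation = 7
    }
  }
}
```

The 30- and 7-day values are illustrative; pick them based on how long you actually need old versions and how long legitimate uploads can take.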

3️⃣ Use Spot Instances and Auto Scaling with Fallbacks

Another effective strategy for reducing AWS costs is to run interruption-tolerant workloads on Spot Instances. Spot Instances can be up to 90% cheaper than On-Demand instances, but AWS can reclaim them with a two-minute warning, which makes them ideal for compute-heavy workloads that don’t need guaranteed capacity. Terraform lets you combine Spot and On-Demand instances in an Auto Scaling group, so an On-Demand baseline keeps the service available while Spot capacity keeps costs down.

```hcl
resource "aws_autoscaling_group" "spot_asg" {
  min_size            = 1
  max_size            = 5
  desired_capacity    = 3
  vpc_zone_identifier = var.subnet_ids

  mixed_instances_policy {
    launch_template {
      launch_template_specification {
        launch_template_id = aws_launch_template.spot.id
        version            = "$Latest"
      }

      # Several similar instance types widen the pool of Spot capacity
      override {
        instance_type = "m5.large"
      }

      override {
        instance_type = "m5a.large"
      }
    }

    instances_distribution {
      on_demand_base_capacity                  = 1  # always keep one On-Demand instance
      on_demand_percentage_above_base_capacity = 20 # above the base: 20% On-Demand, 80% Spot
      spot_allocation_strategy                 = "lowest-price"
    }
  }
}
```
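The Auto Scaling group above references `aws_launch_template.spot`, which the snippet does not define. The sketch below is a minimal version of that template, assuming a hypothetical `ami_id` input variable; its `m5.large` default is superseded by the group's instance-type overrides.

```hcl
variable "ami_id" {
  type        = string
  description = "AMI for the Auto Scaling group (hypothetical input)"
}

resource "aws_launch_template" "spot" {
  name_prefix   = "spot-asg-"
  image_id      = var.ami_id
  instance_type = "m5.large" # the ASG's overrides decide the actual types

  # Deliberately no instance_market_options block: with a mixed instances
  # policy, the ASG's instances_distribution decides Spot vs On-Demand
}
```

The template intentionally requests no Spot market options. AWS rejects launch templates that request Spot directly when they are used in a mixed instances policy, so the Spot/On-Demand split stays in one place: the group's `instances_distribution`.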

4️⃣ Environment-Specific Resources with Terraform

Managing costs across multiple environments (dev, staging, production) can be tricky. However, Terraform provides a simple way to avoid provisioning unnecessary resources in lower environments by using conditional resource creation. For example, you do not need to set up costly RDS instances or large EC2 instances for development or testing. Using conditional logic in Terraform allows you to deploy such resources only in production environments.

```hcl
variable "env" {
  type    = string
  default = "dev"
}

resource "aws_db_instance" "production_db" {
  # Create the database only when deploying to production
  count = var.env == "prod" ? 1 : 0
  # ...
}
```

For example, a fintech company could use this pattern to avoid running production-grade databases in non-production environments, saving hundreds of dollars each month.
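Conditional creation is all-or-nothing; sometimes you want a resource in every environment, just at a different size. The sketch below is a minimal example, assuming the `var.env` variable declared above and a hypothetical `ami_id` input: it maps each environment to an instance type and falls back to the smallest size for any environment it does not recognize. The sizes are illustrative, not recommendations.

```hcl
variable "ami_id" {
  type = string # hypothetical input
}

locals {
  # Hypothetical per-environment sizes; tune them to your own workloads
  instance_sizes = {
    dev     = "t3.micro"
    staging = "t3.small"
    prod    = "m5.large"
  }
}

resource "aws_instance" "app" {
  ami = var.ami_id

  # Unknown environments fall back to the smallest size
  instance_type = lookup(local.instance_sizes, var.env, "t3.micro")
}
```

Defaulting to the cheapest option means a typo in an environment name costs little instead of a lot.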

5️⃣ Terraform Budget Alerts for AWS Cost Optimization

One of the most effective ways to control costs in your Terraform AWS cost optimization playbook is to monitor cloud spend continuously, before it spirals. Terraform can automate cost alerts using the AWS Budgets service: budget notifications alert you whenever actual or forecasted costs cross a predefined threshold, so you can take corrective action early.

```hcl
resource "aws_budgets_budget" "monthly_limit" {
  name         = "team-budget"
  budget_type  = "COST"
  time_unit    = "MONTHLY"
  limit_amount = "200"
  limit_unit   = "USD"

  notification {
    threshold_type             = "PERCENTAGE"
    threshold                  = 80
    comparison_operator        = "GREATER_THAN"
    notification_type          = "ACTUAL"
    subscriber_email_addresses = ["cloud-costs@company.com"]
  }
}
```

For example, a marketing agency with a $200 monthly limit could use this configuration to receive an email once actual spend passes 80% of its budget, giving the team time to act before AWS costs exceed the limit.
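Actual-spend alerts fire only after the money is spent. AWS Budgets also supports forecast-based notifications, which fire when AWS predicts the period will end above the threshold. The sketch below reuses the illustrative $200 limit and email address from the example above; you could equally add this `notification` block to the existing budget instead of creating a second one.

```hcl
resource "aws_budgets_budget" "monthly_forecast" {
  name         = "team-budget-forecast"
  budget_type  = "COST"
  time_unit    = "MONTHLY"
  limit_amount = "200"
  limit_unit   = "USD"

  # Alert when AWS forecasts that month-end spend will exceed the limit
  notification {
    threshold_type             = "PERCENTAGE"
    threshold                  = 100
    comparison_operator        = "GREATER_THAN"
    notification_type          = "FORECASTED"
    subscriber_email_addresses = ["cloud-costs@company.com"]
  }
}
```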

6️⃣ Use Infracost for Pre-Deployment Cost Estimation

In an organization with strict financial controls, knowing the expected costs of new infrastructure before deploying it is crucial. Infracost is an open-source tool that integrates with Terraform to provide cost estimates for your infrastructure changes, ensuring that you understand the financial impact before spinning up resources. By adding Infracost to your CI/CD pipeline, you can show cost estimates directly in your Git pull requests.

```yaml
- name: Generate Infracost cost estimate
  run: infracost diff --path=. --format=json --out-file=diff.json
```

For example, a large company with a cost estimation step in its deployment pipeline could spot a $500/month increase caused by an overprovisioned RDS instance and fix it before the change reaches production.

7️⃣ Use Terraform Modules with Cost-Aware Defaults

Terraform modules can be used to encapsulate common infrastructure patterns and ensure consistency across your organization. When building these modules, think about using cost-aware defaults. This includes choosing cheaper instance families, setting up auto-scaling, and creating lifecycle policies for storage. By doing this, you ensure that teams are consistently using cost-effective practices without having to think about it on a per-resource basis.

```hcl
module "s3_logging" {
  source            = "github.com/org/modules/s3"
  enable_versioning = true
  lifecycle_days    = 30
}
```

A cloud platform team could leverage such modules to automatically transition logs to INTELLIGENT_TIERING after 30 days, avoiding the manual effort of setting up these policies for every new project.
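What makes a module cost-aware is its defaults. The sketch below shows one hypothetical layout for the s3 module called above, assuming the input names `enable_versioning` and `lifecycle_days` from that call plus an illustrative `bucket_prefix`: versioning is opt-in, and objects move to Intelligent-Tiering after `lifecycle_days` unless a caller overrides it.

```hcl
# variables.tf inside the hypothetical modules/s3 directory
variable "bucket_prefix" {
  type    = string
  default = "logs-"
}

variable "enable_versioning" {
  type    = bool
  default = false # versioning keeps noncurrent copies, so it is opt-in
}

variable "lifecycle_days" {
  type    = number
  default = 30 # cost-aware default: tier objects after 30 days
}

# main.tf inside the same module
resource "aws_s3_bucket" "this" {
  bucket_prefix = var.bucket_prefix
}

resource "aws_s3_bucket_versioning" "this" {
  count  = var.enable_versioning ? 1 : 0
  bucket = aws_s3_bucket.this.id

  versioning_configuration {
    status = "Enabled"
  }
}

resource "aws_s3_bucket_lifecycle_configuration" "this" {
  bucket = aws_s3_bucket.this.id

  rule {
    id     = "tier-after-n-days"
    status = "Enabled"
    filter {}

    transition {
      days          = var.lifecycle_days
      storage_class = "INTELLIGENT_TIERING"
    }
  }
}
```

Callers who accept the defaults get the cheaper behavior for free; anyone who needs something different has to say so explicitly in code review.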

8️⃣ Reusable Resource Provisioning with Terraform Modules

Terraform’s modules allow for reusable configurations that ensure efficient provisioning of AWS resources. By standardizing resource creation, modules reduce the risk of misconfigured resources that drive up costs or open security gaps.

For example, a reusable Amazon RDS module might provision database instances with cost-effective settings, selecting the right storage type and instance size based on expected query loads and availability requirements.
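The sketch below is a minimal version of such a module's inputs and main resource, assuming PostgreSQL and hypothetical defaults: a small burstable instance class, 20 GiB of general-purpose storage, and Multi-AZ turned off unless a caller opts in. Callers with heavier query loads or stricter availability requirements override only the variables they need.

```hcl
# variables.tf in a hypothetical modules/rds directory
variable "identifier" {
  type = string
}

variable "db_password" {
  type      = string
  sensitive = true
}

variable "instance_class" {
  type    = string
  default = "db.t4g.micro" # small burstable default; override for heavy query loads
}

variable "allocated_storage" {
  type    = number
  default = 20 # GiB
}

variable "multi_az" {
  type    = bool
  default = false # a standby roughly doubles instance cost; enable for production
}

# main.tf in the same module
resource "aws_db_instance" "this" {
  identifier        = var.identifier
  engine            = "postgres" # assumed engine
  instance_class    = var.instance_class
  allocated_storage = var.allocated_storage
  storage_type      = "gp3" # general-purpose SSD storage
  multi_az          = var.multi_az
  username          = "app_admin"
  password          = var.db_password
}
```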

9️⃣ Infrastructure as Code (IaC) for Cost Control

Using Infrastructure as Code with Terraform helps automate resource creation, update, and deletion. This ensures that resources are only provisioned when necessary, preventing unnecessary idle infrastructure from raising costs. Version-controlled Terraform code also makes tracking changes and optimizing configurations easier over time.

For instance, because every resource is tracked in code, each Terraform plan shows exactly which resources a change adds, modifies, or destroys, so cost-relevant changes are visible in review before they reach your AWS bill.

🔟 Managing Non-production Environments Efficiently

By managing non-production environments such as test environments with Terraform, you can create them only when required and destroy them after use.

This prevents testing and staging environments from incurring unnecessary costs when they are not required.

For example, a CI pipeline can use a Terraform configuration to provision the resources a test run needs and destroy them once the tests complete.
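One way to keep each CI run isolated, so that the final `terraform destroy` removes only what that run created, is a Terraform workspace per run. The sketch below is a minimal example, assuming the pipeline runs `terraform workspace new` with a name derived from its run ID, then `terraform apply`, the tests, and `terraform destroy` in that workspace; the `ami_id` variable and the `Ephemeral` tag are hypothetical.

```hcl
variable "ami_id" {
  type = string # hypothetical input
}

locals {
  # Each CI run selects its own workspace, so resource names never collide
  env_name = "test-${terraform.workspace}"
}

resource "aws_instance" "test_runner" {
  ami           = var.ami_id
  instance_type = "t3.micro"

  tags = {
    Name      = local.env_name
    Ephemeral = "true" # hypothetical tag marking resources the pipeline destroys
  }
}
```

Because each workspace has its own state, a destroy in one run's workspace cannot touch resources that belong to another run.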

1️⃣1️⃣ Optimizing Multi-Region Deployments

Terraform allows you to optimize cross-region deployments by configuring resources only in regions where they are needed. This reduces unnecessary costs from cross-region data transfers and redundant resources.

For example, you can define region-specific providers in Terraform and deploy EC2 instances only in regions with significant demand, avoiding idle resources in low-demand regions.
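The sketch below is a minimal version of that pattern, assuming us-east-1 as the primary region, eu-west-1 as an optional secondary region, and hypothetical AMI variables (AMI IDs differ per region). The aliased provider targets the second region, and a flag keeps that region empty until demand justifies it.

```hcl
provider "aws" {
  region = "us-east-1"
}

provider "aws" {
  alias  = "eu"
  region = "eu-west-1"
}

variable "us_ami_id" {
  type = string # hypothetical input
}

variable "eu_ami_id" {
  type = string # hypothetical input; AMI IDs are region-specific
}

variable "deploy_eu" {
  type    = bool
  default = false # hypothetical flag: enable only when EU demand justifies it
}

resource "aws_instance" "us_app" {
  ami           = var.us_ami_id
  instance_type = "t3.small"
}

resource "aws_instance" "eu_app" {
  provider      = aws.eu
  count         = var.deploy_eu ? 1 : 0
  ami           = var.eu_ami_id
  instance_type = "t3.small"
}
```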

For teams managing multi-region or multi-stack setups, comparing infrastructure-as-code approaches across platforms like Terraform and AWS CloudFormation can offer useful insights into cost management strategies.

Deep Dive: Applying Terraform Cost Strategies to EC2, S3, and Lambda

Now that we’ve covered 11 Terraform strategies for reducing AWS costs, let’s apply them to specific AWS services, starting with EC2, S3, and Lambda. These services are often the biggest contributors to AWS spend, and cost-aware Terraform configurations here can deliver immediate savings.

We’ll map the key strategies above (lifecycle policies, instance right-sizing, budgeting, and conditional provisioning) to real-world usage in these services.

Optimizing EC2 for Terraform AWS Cost Optimization

Applies to strategies:

- #1 Codifying cost-efficient infrastructure

- #3 Using Spot Instances and Auto Scaling

- #4 Environment-specific conditionals

- #11 Multi-region optimization

EC2 instances are often the most significant cost drivers. To optimize them, you can use Terraform to run Spot Instances and Auto Scaling groups, and pair them with Reserved Instances (RIs) or Savings Plans for steady baseline usage.

- Spot Instances: For flexible workloads, Spot Instances can save up to 90% compared to On-Demand pricing. Terraform allows you to automate provisioning Spot Instances within Auto Scaling groups.

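```hcl
resource "aws_autoscaling_group" "web_app" {
  min_size            = 2 # illustrative bounds
  max_size            = 6
  desired_capacity    = 4
  vpc_zone_identifier = var.subnet_ids
  mixed_instances_policy {
    instances_distribution {
      on_demand_percentage_above_base_capacity = 0 # default is 100 (all On-Demand)
      spot_allocation_strategy                 = "capacity-optimized"
    }
    launch_template {
      launch_template_specification {
        launch_template_id = aws_launch_template.spot.id
      }
    }
  }
}
```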

- Auto Scaling: Automatically adjusts the number of EC2 instances based on demand. Defining Auto Scaling groups and their scaling policies in Terraform helps ensure you’re not over-provisioning resources during low-demand periods.

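- Reserved Instances & Savings Plans: RIs and Savings Plans offer substantial discounts for predictable workloads in exchange for a one- or three-year commitment. Where the AWS provider exposes a reservation resource, Terraform lets you record that commitment in code alongside the workloads it covers; because a reservation is a long-term billing commitment, confirm the resource and its arguments against your provider version before applying.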

```hcl
resource "aws_ec2_reserved_instances" "reserved" {
  instance_type     = "t3.medium"
  availability_zone = "us-east-1a"
  duration          = 31536000 # one year, in seconds
  instance_count    = 2
}
```
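To make the Auto Scaling point concrete, a target tracking policy lets the group add and remove instances to keep a metric near a target. The sketch below is a minimal example, assuming the `web_app` group defined earlier in this section and an illustrative 50% average CPU target.

```hcl
resource "aws_autoscaling_policy" "cpu_target" {
  name                   = "cpu-target-50"
  autoscaling_group_name = aws_autoscaling_group.web_app.name
  policy_type            = "TargetTrackingScaling"

  target_tracking_configuration {
    predefined_metric_specification {
      predefined_metric_type = "ASGAverageCPUUtilization"
    }

    # Scale out when average CPU rises above ~50%, scale in when it falls below
    target_value = 50
  }
}
```

The group still respects its min and max sizes, so the policy can shrink capacity during quiet periods without dropping below the floor you set.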

S3 Storage Optimization with Terraform on AWS

Applies to strategies:

- #2 Lifecycle automation

- #7 Modules with cost-aware defaults

- #9 Infrastructure as code for cleanup

Amazon S3 offers cost-saving options such as Intelligent Tiering, Lifecycle Policies, and Storage Class transitions. Terraform makes it easy to manage these features.

- S3 Intelligent-Tiering: Automatically moves data between frequent and infrequent access tiers based on usage patterns, optimizing storage costs.

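```hcl
resource "aws_s3_bucket" "example" {
  bucket = "my-bucket"
}

resource "aws_s3_bucket_lifecycle_configuration" "example" {
  bucket = aws_s3_bucket.example.id

  rule {
    id     = "intelligent-tiering"
    status = "Enabled"
    filter {}

    # Hand objects to Intelligent-Tiering after 30 days
    transition {
      days          = 30
      storage_class = "INTELLIGENT_TIERING"
    }
  }
}
```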

- S3 Lifecycle Policies: Automate data transitions to lower-cost storage classes (e.g., Glacier) or set expiration rules to delete old data.

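```hcl
resource "aws_s3_bucket" "example" {
  bucket = "my-bucket"
}

resource "aws_s3_bucket_lifecycle_configuration" "example" {
  bucket = aws_s3_bucket.example.id

  rule {
    id     = "archive-then-expire"
    status = "Enabled"
    filter {}

    # Move objects to S3 Glacier Flexible Retrieval after 60 days
    transition {
      days          = 60
      storage_class = "GLACIER"
    }

    # Delete objects one year after creation
    expiration {
      days = 365
    }
  }
}
```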
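Intelligent-Tiering can go further: objects in that storage class can opt into archive tiers after long periods without access. The sketch below is a minimal example, assuming the `aws_s3_bucket.example` bucket above and illustrative thresholds (90 and 180 days are the minimums for these two tiers).

```hcl
resource "aws_s3_bucket_intelligent_tiering_configuration" "archive_tiers" {
  bucket = aws_s3_bucket.example.id
  name   = "EntireBucket"
  status = "Enabled"

  # Objects not accessed for 90 days move to the Archive Access tier
  tiering {
    access_tier = "ARCHIVE_ACCESS"
    days        = 90
  }

  # After 180 days without access, objects move to Deep Archive Access
  tiering {
    access_tier = "DEEP_ARCHIVE_ACCESS"
    days        = 180
  }
}
```

Objects in these archive tiers must be restored before they can be read, so this suits data that rarely needs to be retrieved quickly.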

Optimizing AWS Lambda Costs

Applies to strategies:

- #1 Cost-efficient resource configuration

- #4 Conditional provisioning by environment

- #10 Managing non-production environments

AWS Lambda charges per request and for compute time, which is billed in proportion to the memory you allocate, so it’s essential to optimize both memory and execution time. Terraform lets you fine-tune Lambda settings for better cost management.

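- Right-sizing Memory Allocation: Terraform lets you allocate only the memory a Lambda function actually needs, which reduces the cost of each invocation. Because Lambda allocates CPU in proportion to memory, measure execution time after lowering it: a slower function can erase the savings.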

```hcl
resource "aws_lambda_function" "example" {
  function_name = "my-lambda-function"
  filename      = "function.zip" # hypothetical deployment package
  memory_size   = 512            # MB; also determines the CPU share
  runtime       = "nodejs20.x"
  handler       = "index.handler"
  role          = aws_iam_role.lambda_exec.arn
}
```

- Versions and Aliases: Use published versions and aliases to control which code receives production traffic, ensuring only stable, tested versions serve production requests.

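```hcl
resource "aws_lambda_alias" "prod" {
  name          = "prod"
  function_name = aws_lambda_function.example.function_name
  # Pin the alias to a published, immutable version instead of "$LATEST";
  # assumes publish = true is set on aws_lambda_function.example
  function_version = aws_lambda_function.example.version
}
```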

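- Dead Letter Queues (DLQs): For asynchronous invocations, configure a DLQ so events that still fail after Lambda’s built-in retries are captured for inspection instead of being silently dropped. Combined with a lower retry limit, this avoids paying repeatedly for invocations that keep failing.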

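```hcl
resource "aws_lambda_function" "example" {
  function_name = "my-lambda-function"
  filename      = "function.zip" # hypothetical deployment package
  memory_size   = 128
  runtime       = "nodejs20.x"
  handler       = "index.handler"
  role          = aws_iam_role.lambda_exec.arn # needs sqs:SendMessage on the DLQ

  # Async events that fail all retries are sent to this SQS queue
  dead_letter_config {
    target_arn = aws_sqs_queue.lambda_dlq.arn
  }
}
```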
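The DLQ example references `aws_sqs_queue.lambda_dlq` without defining it. The sketch below adds that queue plus an asynchronous invocation config that lowers the retry count from Lambda's default of two and discards events older than an hour; the queue name and both limits are illustrative assumptions.

```hcl
resource "aws_sqs_queue" "lambda_dlq" {
  name                      = "my-lambda-function-dlq"
  message_retention_seconds = 1209600 # 14 days, the SQS maximum
}

resource "aws_lambda_function_event_invoke_config" "example" {
  function_name = aws_lambda_function.example.function_name

  # Retry a failed async event once instead of the default twice
  maximum_retry_attempts = 1

  # Drop events that have waited in the async queue for more than one hour
  maximum_event_age_in_seconds = 3600
}
```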

What Are AWS Cost Optimization Tools?

AWS and the wider tooling ecosystem offer a range of cost optimization tools to help users monitor, manage, and reduce their cloud spend. These tools provide visibility into resource usage, enable budget enforcement, and recommend cost-saving opportunities.

- AWS Budgets: Allows you to set custom cost and usage budgets and receive alerts when thresholds are exceeded. This helps enforce financial discipline across teams.

- AWS Cost Explorer: Offers detailed insights and visualizations of your cloud spending patterns, helping you identify areas to optimize.

- AWS Compute Optimizer: Analyzes usage patterns and recommends optimal EC2 instance types, Auto Scaling groups, and other compute resources for cost and performance.

- Infracost: Integrates with Terraform and shows the estimated cost impact of infrastructure changes before deployment, enabling proactive cost management during development.

- Third-party tools: Solutions like ControlMonkey help manage infrastructure and cost efficiency by automating governance and continuously optimizing cloud resources.

Conclusion: Optimize AWS Costs with Terraform — Intentionally

Terraform isn’t just about provisioning infrastructure. Used with intent, it’s a powerful cost control tool and the foundation for Terraform AWS cost optimization at scale. By codifying best practices, automating resource lifecycles, and using modules with cost-aware defaults, teams can reduce AWS spend without compromising performance.

From EC2 to S3 to Lambda, Terraform helps you right-size, clean up, and control cloud usage — all in code.

Want to take your cost optimization further?

ControlMonkey integrates seamlessly with Terraform to enforce guardrails, detect misconfigurations, and eliminate cloud waste in real time.

👉 Book a demo and explore how ControlMonkey automates Terraform AWS cost optimization at scale